Sonic Kayak development

Made by FoAM-Kernow, the Sonic Kayak is an on-water development of the Sonic Bike. It carries underwater environmental sensors to generate music live from the marine world, giving the paddler an extra dimension through which to experience the underwater climate, whilst enabling citizens to gather climate and environmental data. The kayaks also explore sonic navigation experiences for vision-diverse paddlers by using new satellite developments to provide greater locational accuracy on the open water.

2020

Nov 2020: The Sonic Kayak team have written a journal paper on bioRxiv, ‘New water and air pollution sensors added to the Sonic Kayak citizen science system for low cost environmental mapping’. Read the paper here.

Oct 2020: Read the latest article explaining the Sonic Kayak’s developments, published in the ‘MagPi’ Raspberry Pi magazine.

September 2020: ‘Sonic Kayak update – new sensors, sonifications, and visualisations’ from FoAM Kernow

June 2020: The latest update from FoAM Kernow – ‘Sonic Kayak environmental data sonification’

Spring 2020

Despite COVID, we have been lucky enough to continue this project through virtual sonification labs with Kaffe in Berlin and FoAM-Kernow (Dave Griffiths & Amber Griffiths) in Cornwall.

Supported by ACTION European funding, FoAM-Kernow are directing the research to design and test two new sensors for the Sonic Kayaks:

1) air pollution and

2) water turbidity (cloudiness).

The kayaks already carry an underwater temperature sensor and a hydrophone. The data collected through all sensors is recorded for open scientific databases as well as being sonified live to the paddler through a stereo speaker mounted on the kayak. Sound from the hydrophone is also present, with the ambient paddling, water and wind sounds of course adding to the experience.

This project is creating a new scientific tool for live field research of underwater environments, whilst creating opportunities to make new marine compositions exploring ecoacoustics and sonification.

FoAM have been leading on the tech and sensor application – adapting the Sonic Bike kit for the kayak, making custom sensor housings and developing the software.

Kaffe, with PD support from Federico Visi, also in Berlin, is working alongside, exploring data sonification techniques: granular synthesis of animal and insect calls, vocal synthesis, and triggering of tones and samples by interpreting the data as peaks and troughs, rises and falls, to make ‘states’. They record and share their experiments with each other, continually coming back to the question: will these audio outcomes communicate clear meaning to the paddler, while enhancing rather than destroying the peaceful experience of the Sonic Kayak out on open water?

Sonification

Data Treatment:

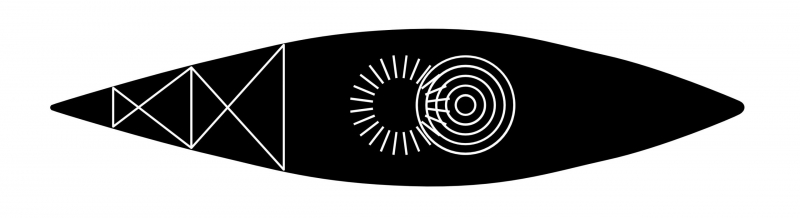

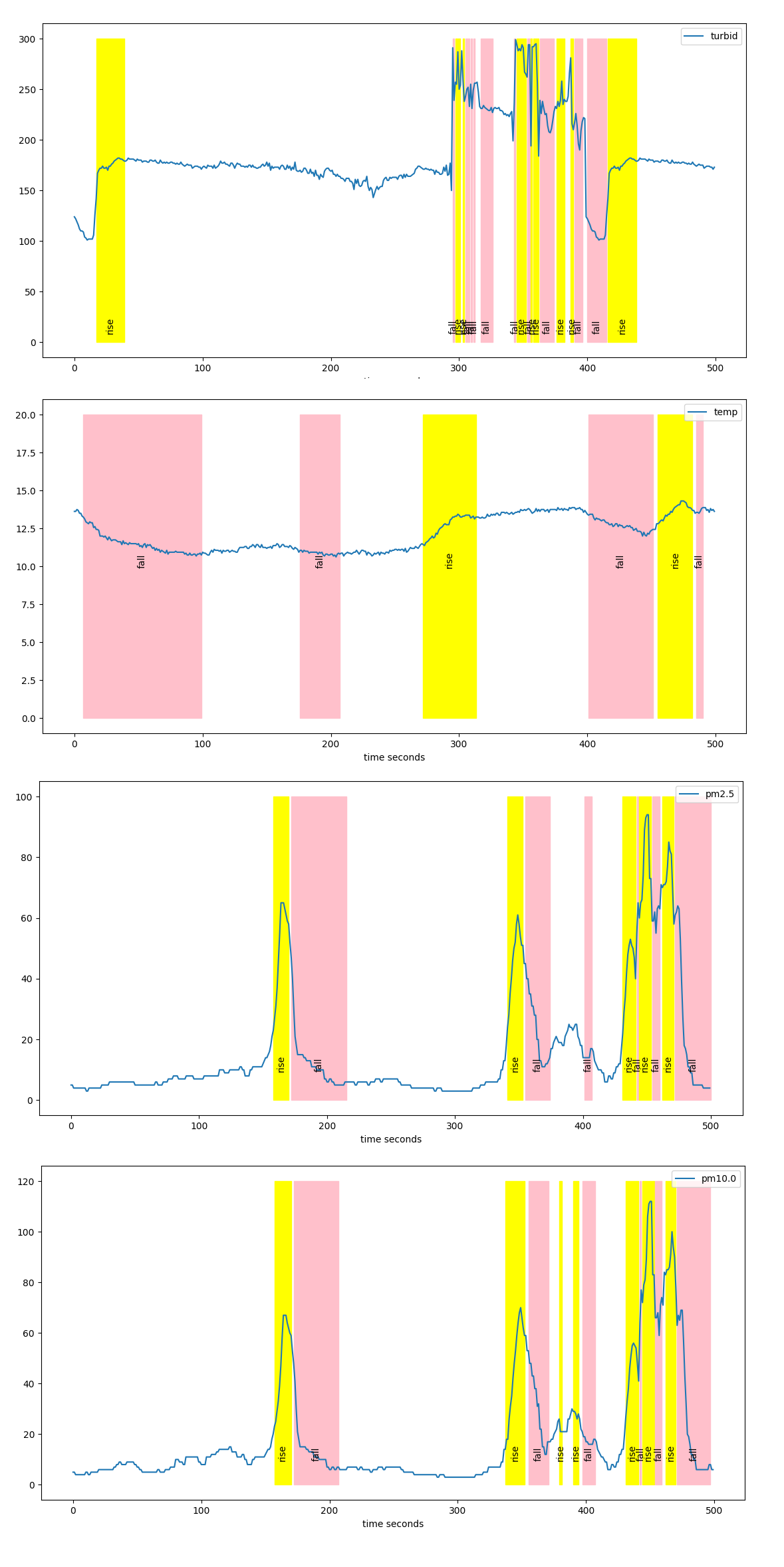

One approach is to make ‘states’, so that we can work more meaningfully with live data pouring in as continuous streams. The same approach can be used for analysis: re-examining recorded data, then magnifying the relevant active data ranges to create clearer sonic responses and hopefully more meaning. The test ‘states’ are: Rise, Peak, Fall and Trough.
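As a rough illustration of ‘magnifying’ an active data range, the sketch below rescales a chosen window of values so that it fills the whole 0 to 1 control range, clipping anything outside it. This is a minimal Python sketch, not the project's PD patch; the function name `magnify` and the example readings are made up for illustration.

```python
def magnify(value, low, high):
    """Rescale `value` so that the range [low, high] fills 0.0..1.0.

    Values outside the range are clipped. `low` and `high` are assumed
    to be picked by hand after looking at recorded data.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    scaled = (value - low) / (high - low)
    return min(1.0, max(0.0, scaled))


# Hypothetical readings: most of the activity sits between 8 and 12,
# with one large spike that would otherwise flatten everything else.
readings = [8.0, 9.0, 12.0, 30.0, 11.0]
controls = [magnify(r, 8.0, 12.0) for r in readings]
```

With the window set to 8 to 12, small changes in the quiet part of the data spread across the full control range, while the spike simply saturates at 1.0.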

As it’s impossible to get on the water during COVID times, we are working with air quality data recorded by walking near water. The graph above shows two major peaks: the first was a passing lorry belching smoke and the second a house with a log burner.

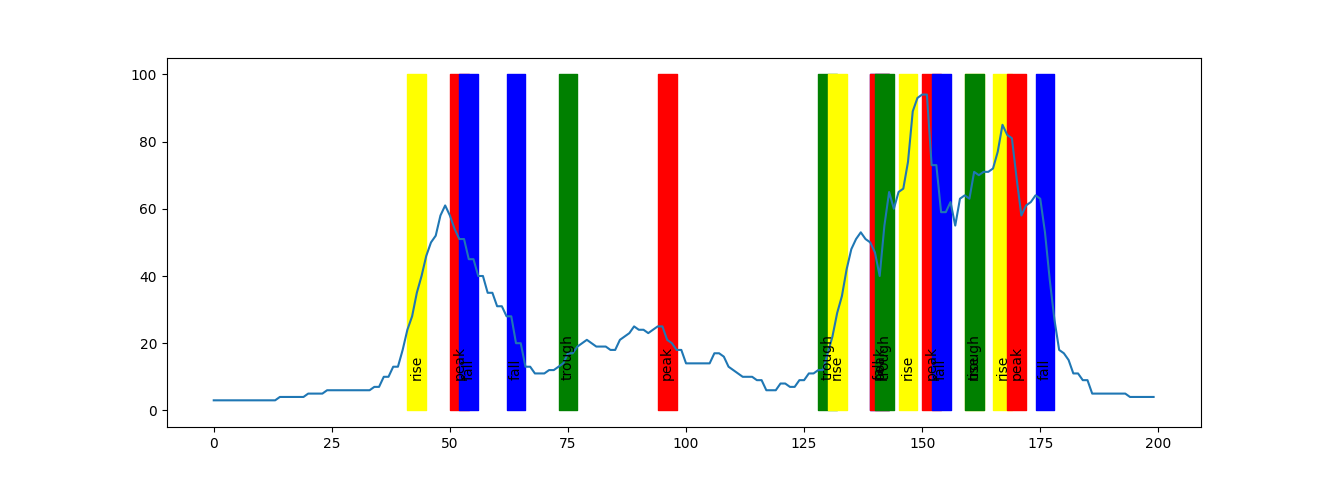

This graph shows the same data over a longer period of an hour, which would produce long periods of silence as nothing significant is happening.

Note that data ‘states’ are slightly delayed, as you don’t know you’ve had a peak until the value has fallen slightly (the reverse for troughs) – and we can’t read data from the future. The graphs below show other ways of assessing the ‘states’.
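The delay can be seen in a small Python sketch of one possible way to label a stream with the four states. It is an assumption-laden illustration rather than the project's actual method: the function `label_states` and the `margin` parameter (how far the value must fall back from its running maximum before a peak is accepted) are hypothetical.

```python
def label_states(samples, margin):
    """Label each sample as "Rise", "Peak", "Fall" or "Trough".

    A Peak is only reported once the value has dropped more than
    `margin` below the running maximum (and the reverse for a Trough),
    so the label always arrives a little after the true turning point.
    """
    labels = []
    rising = True              # assumption: start by looking for a peak
    high = float("-inf")       # running maximum
    low = float("inf")         # running minimum
    for value in samples:
        high = max(high, value)
        low = min(low, value)
        if rising and value < high - margin:
            labels.append("Peak")      # confirmed after the fact
            rising = False
            low = value                # start tracking the next trough
        elif not rising and value > low + margin:
            labels.append("Trough")    # confirmed after the fact
            rising = True
            high = value               # start tracking the next peak
        else:
            labels.append("Rise" if rising else "Fall")
    return labels


# Made-up data: the true maximum is the 9, but "Peak" is only
# reported two samples later, once the value has dropped to 6.
labels = label_states([1, 2, 5, 9, 8, 6, 3, 2, 3, 6], margin=2)
```

A larger `margin` ignores more of the small wobbles in the data but makes each Peak and Trough arrive later.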

Sound:

Kaffe has been exploring a number of options for sound, working from recordings of above-water life – dragonflies, insects and bats (everyone agreed no birds) for air pollution response, and a selection of frogs and toads for turbidity response. Together with a range of synthesized dongs, tones and digital pulses, this gives a breadth of material that is collectively selected and set in PD to be processed or triggered by the incoming data streams/states.

Approaches for testing so far:

Four different approaches to the chosen sounds and data treatments were finally selected, as follows:

- Recognisable vs Processed – PD granulation and filtration of animal sounds via data streams; stretching, shattering and exploding them into new audio worlds, unrecognisable as animals.

- Just Animals – ‘states’ trigger selected animal sounds, with higher/lower states raising/lowering pitches. (Air quality – dragonflies; Turbidity – frogs; Water Temp – tom drum)

- Simple synthesized sounds – Air quality – digital pulse; Turbidity – synthesis ‘dong’; Water Temp – bending sine tone. Triggered by states whose value causes filtration.

- Synthesized vocal readout – a verbal description of the data from ‘states’, e.g. “water temperature is rising”, “water is clear”, “getting cloudy”, “air is more polluted” (in both male and female voices)
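To make the ‘Just Animals’ idea concrete, here is a minimal Python sketch of a state triggering a sound whose pitch follows the state's level. The real work is done in PD, so this is only an illustration: the sensor keys, the sample names, the 0 to 1 `level` input and the one-octave range are all assumptions, and the semitone-to-rate formula is the standard equal-temperament one.

```python
# Sensor-to-sound pairing taken from the list above; the dictionary
# keys and sample names are hypothetical labels for illustration.
SOUNDS = {
    "air_quality": "dragonflies",
    "turbidity": "frogs",
    "water_temp": "tom drum",
}


def playback_rate(level, semitone_range=12.0):
    """Map a level in 0..1 to a sample playback rate.

    0.5 leaves the pitch unchanged; 0.0 and 1.0 shift it down or up
    by `semitone_range` semitones (one octave by default, an assumption).
    """
    level = min(1.0, max(0.0, level))
    semitones = (level - 0.5) * 2.0 * semitone_range
    return 2.0 ** (semitones / 12.0)


def trigger(sensor, level):
    """Describe the sound event a new state would trigger."""
    return {"sample": SOUNDS[sensor], "rate": playback_rate(level)}


# A high turbidity state plays the frogs an octave up.
event = trigger("turbidity", 1.0)
```

A narrower `semitone_range` would keep the animals closer to their natural pitch, at the cost of making differences between states harder to hear.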