Sonification 9 – finding the ranges – ah, time

Dave has sent a PD patch that outputs the three data streams (temperature, turbidity and particulate matter) as states. In other words, we are no longer receiving the three continuous streams of data that the audio mix uploaded in the previous post was built from. Now we need to build one modular patch that responds to the individual data states simultaneously, while also directing each state to the appropriate sound: the drum sound in the LFO/phasor, the dragonfly in one JAG and the frogs in the other. To a PD amateur like me this is a huge task, but Federico grins and disappears for a week, then presents a brilliantly functional and modular MoSoLab.

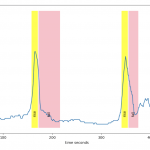

Of course it’s then relatively easy to get it playing with our sounds, but how do we get it to shape them from these new data states into a range of outcomes that are meaningful as well as sonically interesting? [Note also that the two data states sent run for only 1 minute and 2 minutes.]

Well. This is where the studio immersion comes in, as it’s a question of finding the range of each parameter (in the JAGs it’s all in the offsets) and balancing it against the range of the data, which takes far more patient, in-depth fiddling than you might imagine. So much learning...
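As a rough illustration of what "balancing a parameter range against a data range" involves, here is a minimal Python sketch of a linear range mapping, similar in spirit to what a scaling expression in a PD patch might do. It is not taken from the MoSoLab patch: the function name `scale` and the turbidity and offset ranges below are hypothetical placeholders, not the actual sensor ranges or JAG offsets.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi, clip=True):
    """Linearly map value from [in_lo, in_hi] onto [out_lo, out_hi]."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    # Position of the value within the data range, 0.0 at in_lo and 1.0 at in_hi.
    t = (value - in_lo) / (in_hi - in_lo)
    if clip:
        # Keep out-of-range readings from pushing the parameter to extremes.
        t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


# Hypothetical ranges: a turbidity reading of 0 to 100 (placeholder units)
# mapped onto a hypothetical JAG offset range of 0.2 to 0.8.
TURBIDITY_RANGE = (0.0, 100.0)
OFFSET_RANGE = (0.2, 0.8)

for reading in (0.0, 25.0, 50.0, 120.0):
    offset = scale(reading, *TURBIDITY_RANGE, *OFFSET_RANGE)
    print(f"turbidity {reading:6.1f} -> offset {offset:.3f}")
```

The mapping itself is trivial; the time goes into choosing the two ranges by ear, so that the quietest and most extreme data states both land on offsets that still sound interesting.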